Polling: What Can We Learn From the UK?

While I do not normally follow British politics, I am interested in the opinion polling around the UK's May elections. Campaigns rely heavily on polling to learn where their candidates stand with the public and where they could improve. Polls also provide content for desperate reporters and news agencies looking to fill time and column inches.

The accuracy of pre-election polls is important not only for people who want to know who is currently in the lead, but also for political campaigns searching for an edge for their candidate.

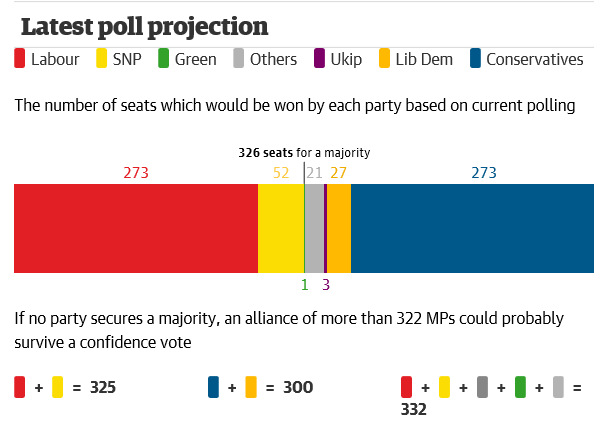

The opinion polls leading up to this year’s UK election were particularly inaccurate. Nearly every popular poll placed the Conservative and Labour parties within one percentage point of each other. The polls indicated that the election would likely produce a “hung” Parliament, in which no party holds a majority of seats.

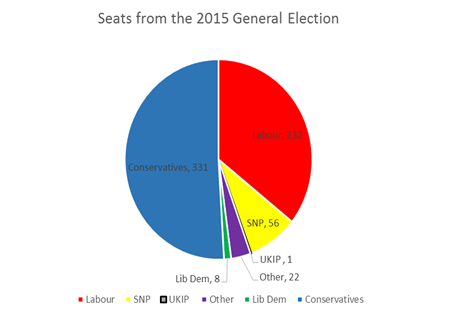

What actually happened is that the Conservative Party won a slight majority of seats. The Conservatives, led by David Cameron, secured 331 seats, enough for a majority (a majority requires 326 of the 650 seats). Labour secured 232 seats, the Scottish National Party (SNP) secured 56 seats, the Liberal Democrats retained 8 seats, the United Kingdom Independence Party (UKIP) now has 1 seat, and other parties make up the remaining 22 seats.

Clearly, what actually happened is very different from the neck-and-neck dead heat that the polls predicted.

So, what happened? What went wrong with the polling?

Multiple sources (FiveThirtyEight, The Telegraph, and The Conversation) have attributed the misses to a failure to account for the documented late swing towards the incumbent party (the Conservatives), something that traditionally happens in UK elections. According to Leighton Vaughan Williams’s article in The Conversation, pollsters also overestimated the number of people who would vote. The Conversation further points to pollsters’ methodology: most of these polls asked respondents about parties (Conservative, Labour, etc.) rather than naming the actual local candidates, an approach that tends to “miss a lot of late tactical vote switching.” The late swing, the overestimated turnout, and these methodological issues are all possible explanations for why the polls were so inaccurate.

Granted, polling UK voters is historically difficult. In the 1992 election the polls missed by an even wider margin than they did this year, and in 2015 history repeated itself.

So, what does this mean for the future? Is this a harbinger of our elections in 2016?

It’s no secret that traditional polling methods are quickly becoming outdated. According to MPR News, political polling is evolving to incorporate social media monitoring and analytics. Another emerging technology in campaigns is biometrics.

While some countries have started to use biometrics at polling stations to help with voter identification, the technology could do more. Biometric polling could be more effective than traditional surveys because it measures how strongly a specific person reacts to a statement or question, or how much they want to vote for a candidate. Even though this technology is new and still in development, I think it will change the accuracy and landscape of campaign research. The 2016 US presidential race is sure to showcase some new polling methods, and it will be a good opportunity to observe what does and does not work in a rapidly changing industry.